AI algorithms are puzzled by our online behavior during the coronavirus pandemic

At some point, every one of us has had the feeling that online applications like YouTube, Amazon, and Spotify seem to know us better than we know ourselves, recommending content that we like even before we say so. At the heart of these platforms’ success are artificial intelligence algorithms, or more precisely, machine learning models, that can find intricate patterns in huge sets of data.

Corporations in different sectors leverage the power of machine learning, along with the availability of big data and compute resources, to bring remarkable improvements to all sorts of operations, including content recommendation, inventory management, sales forecasting, and fraud detection. Yet, despite their seemingly magical behavior, current AI algorithms are very efficient statistical engines that can predict outcomes only as long as conditions don’t deviate too much from the norm.

But during the coronavirus pandemic, things are anything but normal. We’re working and studying from home, commuting less, shopping more online and less from brick-and-mortar stores, Zooming instead of meeting in person, and doing anything we can to stop the spread of COVID-19.

The coronavirus lockdown has broken many things, including the AI algorithms that seemed to be working so smoothly before. Warehouses that depended on machine learning to keep their stock filled at all times are no longer able to predict the right items that need to be replenished. Fraud detection systems that home in on anomalous behavior are confused by new shopping and spending habits. And shopping recommendations just aren’t as good as they used to be.

How AI algorithms see the world

To better understand why unusual events confound AI algorithms, consider an example. Suppose you’re running a bottled water factory and have several vending machines in different locations. Every day, you distribute your produced water bottles between your vending machines. Your goal is to avoid a situation where one of your machines is stocked with rows of unsold water while others are empty.

In the beginning, you start by evenly distributing your produced water between the machines. But you realize that some machines run out of bottled water faster than others. So, you readjust the quota to allocate more to regions that have more consumption and less to those that sell less.

To better manage the distribution of your water bottles, you decide to create a machine learning algorithm that predicts the sales of each vending machine. You train the AI algorithm on the date, location, and sales of each vending machine. With enough data, the machine learning algorithm will create a formula that can predict how many bottles each of your vending machines will sell on a given day of the year.

This is a very simple machine learning model, and it sees the world through two independent variables: date and location. You soon realize that your AI’s predictions are not very accurate, and it makes a lot of errors. After all, a lot of factors can affect water consumption at any one location.

To improve the model’s performance, you start adding more variables to your data table—or features, in machine learning jargon—including columns for temperature, weather forecast, holiday, workday, school day, and others.

As you retrain the machine learning model, patterns emerge: The vending machine at the museum sells more in summer holidays and less during the rest of the year. The machine at the high school is pretty busy during the academic year and idle during the summer. The vending machine at the park sells more in spring and summer on sunny days. You have more sales at the library during the final exams season.

This new AI algorithm is much more flexible and more resilient to change, and it can predict sales more accurately than the simple machine learning model that was limited to date and location. With this new model, not only are you able to efficiently distribute your produced bottles across your vending machines, but you now have enough surplus to set up a new machine at the mall and another one at the cinema.
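To make the idea of a feature-to-outcome mapping concrete, here is a minimal Python sketch. It assumes made-up sales numbers and the simplest possible "model": the average of past sales for every combination of feature values. Real systems would use regression, decision trees, or neural networks instead; the averaging model, the data, and all names here are illustrative assumptions. The error is measured on the training data itself, only to show what adding a weather feature does.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical sales history: (location, month, sunny, bottles_sold).
HISTORY = [
    ("park", 7, True, 120), ("park", 7, False, 40),
    ("park", 7, True, 110), ("park", 7, False, 50),
    ("museum", 7, True, 90), ("museum", 7, False, 95),
    ("museum", 1, True, 20), ("museum", 1, False, 25),
]


def date_and_location(record):
    """Features of the simple model: where and when."""
    location, month, _sunny, _sold = record
    return (location, month)


def with_weather(record):
    """Features of the richer model: where, when, and whether it was sunny."""
    location, month, sunny, _sold = record
    return (location, month, sunny)


def fit(records, features):
    """Map each combination of feature values to the average past sales."""
    groups = defaultdict(list)
    for record in records:
        groups[features(record)].append(record[-1])
    return {key: mean(sales) for key, sales in groups.items()}


def mean_abs_error(model, records, features):
    """Average absolute gap between predicted and actual sales."""
    return mean(abs(model[features(r)] - r[-1]) for r in records)


simple = fit(HISTORY, date_and_location)
richer = fit(HISTORY, with_weather)
print(mean_abs_error(simple, HISTORY, date_and_location))  # 18.75
print(mean_abs_error(richer, HISTORY, with_weather))       # 2.5
```

The richer model separates sunny and cloudy days at the park, which the simple model lumps together into one average; that is the same effect that adding temperature and weather columns has in the story.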

This is a very simple description, but most machine learning algorithms, including deep neural networks, basically share the same core concept: a mapping of features to outcomes. The difference is that the artificial intelligence algorithms that power the platforms of tech giants use many more features and are trained on huge amounts of data.

For instance, the AI algorithm powering Google’s ad platform takes your browsing history, search queries, mouse hovers, pauses on ads, clicks, and dozens (or maybe hundreds) of other features to serve you ads that you are more likely to click on. Facebook’s AI uses tons of personal information about you, your friends, your browsing habits, and your past interactions to serve “engaging content” (a euphemism for stuff that keeps you stuck to your News Feed to show more ads and maximize its revenue). Amazon uses tons of data on shopping habits to predict what else you would like to buy when you’re viewing a pair of sneakers.

How AI algorithms don’t see the world

As fascinating as today’s artificial intelligence algorithms are, they certainly don’t see or understand the world as we do. More importantly, while they can dig out correlations between variables, machine learning models don’t understand causation.

For instance, we humans can come up with a causal explanation for why the vending machine at the park sells more bottled water during warm, sunny days: People tend to go to parks when it’s warm and sunny, which is why they buy more bottled water from the vending machine. Our AI, however, knows nothing about people and outdoor activities. Its entire world is made up of the few variables it has been trained on, and it can only find a positive correlation between temperature and sales at the park.

This doesn’t pose a problem as long as nothing unusual happens. But here’s where it becomes problematic: Suppose the ceiling of the museum caves in during the tourism season, and it closes for maintenance. Obviously, people will stop going to the museum until the ceiling is repaired, and no one will purchase water from your vending machine. But according to your AI model, it is mid-July and you should be refilling the machine every day.
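The failure mode can be reproduced with the same kind of averaging sketch. The data and names are assumptions for illustration: because the museum was open on every recorded day, "venue closed" was never a feature, so the model has no way to use that fact and keeps predicting July-level sales.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical history: (location, month, bottles_sold). The museum was open
# on every recorded day, so no "venue closed" column was ever collected.
HISTORY = [
    ("museum", 7, 90), ("museum", 7, 100),
    ("museum", 1, 20), ("museum", 1, 30),
]


def fit(records):
    """Average past sales for each (location, month) pair."""
    groups = defaultdict(list)
    for location, month, sold in records:
        groups[(location, month)].append(sold)
    return {key: mean(sales) for key, sales in groups.items()}


def predict(model, situation):
    """The model can only look at the features it was trained on."""
    return model[(situation["location"], situation["month"])]


model = fit(HISTORY)

# Mid-July, but the ceiling has caved in and the museum is closed.
today = {"location": "museum", "month": 7, "museum_closed": True}
print(predict(model, today))  # 95: "museum_closed" is silently ignored
```

Adding an "is open" column would not help on its own either: the model would need past examples of closed days to learn what that column means.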

A ceiling collapse is not a major event, and the effect it has on your operations is minimal. But what happens when the coronavirus pandemic strikes? The museum, cinema, school, and mall are closed. And very few people dare to defy quarantine rules and go to the park. In this case, none of the predictions of your machine learning model turn out to be correct, because it knows nothing about the single factor that overrides all the features it has been trained on.

How humans deal with unusual events

Unlike the unfortunate incident at the museum, the coronavirus pandemic is what many call a black swan event, a very unusual incident that has a huge and unpredictable impact across all sectors. And narrow AI systems, which is what we have today, are very bad at dealing with the unpredictable and unusual. Your AI is not the only one that is failing. Fraud detection systems, spam and content moderation systems, inventory management, automated trading, and all machine learning models that had been trained on our usual life patterns are breaking.

We humans, too, are confounded when faced with unusual events. But we have been blessed with intelligence that extends way beyond pattern-recognition and rule-matching. We have all sorts of cognitive abilities that enable us to invent and adapt ourselves to our ever-changing world.

Back to our bottled water business. Realizing that your precious machine learning algorithm won’t help you during the coronavirus lockdown, you scrap it and rely on your own world knowledge and common sense to find a solution.

You know that people won’t stop drinking water when they stay at home. So you pivot from vending machines to selling bottled water online and delivering it to customers at their homes. Soon, the orders start coming in, and your business is booming again.

While AI failed you when the coronavirus pandemic struck, you know that it’s not useless and can still be of much help. As your online business grows, you decide to create a new machine-learning algorithm that predicts how much water each district will consume on a daily basis. Good luck!

For the moment, what we have are AI systems that can perform specific tasks in limited environments. One day, maybe, we will achieve artificial general intelligence (AGI), computer software that has the general problem-solving capabilities of the human mind. That’s the kind of AI that can innovate and quickly find solutions to pandemics and other black swan events.

Until then, as the coronavirus pandemic has highlighted, artificial intelligence will be about machines complementing human efforts, not replacing them.

This article was originally published by Ben Dickson on TechTalks, a publication that examines trends in technology, how they affect the way we live and do business, and the problems they solve. But we also discuss the evil side of technology, the darker implications of new tech and what we need to look out for. You can read the original article here.

IBM bets on AI and robotics to speed up drug discovery


An end-to-end, integrated chemical research system unveiled by IBM last week gives us a glimpse of how artificial intelligence, robotics and the cloud might change the future of drug discovery.

And it’s as good a time as any to see a breakthrough in the field.

The world is still struggling with the COVID-19 pandemic, and the race to find a vaccine for the dangerous novel coronavirus has not yet yielded reliable results. Researchers are bound by travel and social distancing limitations imposed by the virus, and for the most part, they still rely on manual methods that can take many years. While in some cases such delays are merely an inconvenience, in the case of COVID-19 they mean more lives lost.

Called RoboRXN, IBM’s new system leverages deep learning algorithms, IBM’s cloud, and robotic labs to automate the entire process and assist chemists in their work without requiring physical presence in a research lab. After seeing the presentation by IBM Research, I would describe RoboRXN as an example of bringing together the right pieces to solve a pressing problem.

It’s not yet clear whether this or any of the other efforts led by large tech companies will help in developing a coronavirus vaccine. But they will surely help lay the groundwork for the next generation of drug and chemical research tools and make sure we are better prepared in the future.

Using AI for chemical synthesis and retrosynthesis

IBM’s RoboRXN is the culmination of three years of research and development in applying AI to chemical research. In 2017, the company developed an AI system for predicting chemical reactions in forward synthesis.

Hypothesizing about chemical reactions and experimenting with different chemical components is one of the most time-consuming parts of chemical research. It requires a lot of experience, and chemists usually specialize in specific fields, making it challenging for them to tackle new tasks.

IBM’s AI is a neural machine translation system tailored to chemical synthesis. Artificial neural networks have made great inroads in natural language processing in recent years. While neural networks do not understand the context of human language, their broader capabilities in processing sequential data can serve many fields, including chemical research.

For instance, recurrent neural networks (RNNs) and transformers can perform sequence-to-sequence mapping. Train an RNN on a set of input strings and their corresponding output strings, and it will find statistical correlations that map the inputs to outputs (you still need quality data, though). These strings can contain any kind of symbols, including letters, musical notes, or character representations of atoms and molecules. As long as there is consistency in the data and there are patterns to be learned, the neural network can find a way to map the inputs to the outputs.
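To see how chemistry fits a sequence-to-sequence setup, here is a sketch of the preprocessing step only: turning a reaction written in SMILES notation into input and output token sequences. The regular expression is a simplified version, assumed for illustration, of the regex-based SMILES tokenizers common in reaction-prediction work; it is not IBM’s code, and no model is trained here.

```python
import re

# Simplified SMILES tokenizer (an assumption for illustration). Multi-character
# atoms such as Br and Cl, and bracketed atoms like [NH4+], are listed before
# single characters so they stay whole.
SMILES_TOKEN = re.compile(
    r"(\[[^\]]+\]|Br|Cl|B|C|N|O|S|P|F|I|b|c|n|o|s|p|\(|\)|\.|=|#|-|\+|\\|/|:|~|@|\?|\*|\$|%[0-9]{2}|[0-9])"
)


def tokenize(smiles: str) -> list[str]:
    """Split a SMILES string into atom, bond, branch, and ring tokens."""
    tokens = SMILES_TOKEN.findall(smiles)
    # findall silently skips unmatched characters, so check nothing was lost.
    if "".join(tokens) != smiles:
        raise ValueError(f"could not fully tokenize {smiles!r}")
    return tokens


# Esterification of acetic acid with ethanol to ethyl acetate, written as
# "reactants>>product" (the water by-product is omitted). The reactant side
# is the network's input sequence, the product side its output sequence.
reaction = "CC(=O)O.OCC>>CC(=O)OCC"
source, target = reaction.split(">>")
print(tokenize(source))
print(tokenize(target))
```

Once reactions are token sequences, predicting a product from reactants has the same shape as translating a sentence from one language to another.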

Trained on a dataset of more than 2 million chemical reactions, the neural network was first introduced in a paper presented by the IBM Research team at the NIPS 2017 AI conference. The next year, IBM developed the AI into RXN for Chemistry, a cloud-based platform for chemical research, and presented it at the American Chemical Society annual exposition. RXN for Chemistry aids chemists in predicting the likely outcome of chemical reactions, saving research time and reducing the years it takes to acquire experience.

In 2019, the IBM Research team improved the AI behind RXN for Chemistry to also support retrosynthesis. This is the inverse process of chemical synthesis. In this case, you already know the molecular structure you want to achieve. The AI must predict the series of steps and chemical components needed to reach the desired result.

“The retrosynthesis planning models were developed in collaboration with retrosynthesis experts from the University of Pisa, who constantly gave us feedback on how to improve our models,” Teodoro Laino, the manager of IBM Research Zurich, told TechTalks.

IBM RXN for Chemistry can also design retrosynthetic routes in an interactive mode.

In the interactive mode, the human chemist goes through the route step by step, getting suggestions from the AI at each stage. “Chemical synthesis becomes a human-AI interaction game,” Laino says.

Bringing the AI pieces together

Philippe Schwaller, predoctoral researcher at IBM Research Zurich, told TechTalks that the final AI system used in RoboRXN is composed of several sequence-to-sequence transformer models, each performing one part of the task.

“Given a target molecule, RoboRXN breaks it down in multiple recipe steps using predictions by a retro reaction prediction and a pathway scoring model until the system finds commercially available molecules,” Schwaller said. “Then, for each step in the recipe, the reaction equations are converted using another seq-2-seq transformer model to all necessary actions, which the robot has to perform, to successfully run the chemical reaction. This model predicts reaction conditions (e.g. temperature, duration) for the different actions (e.g. add, stir, filter).”
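The recipe-building loop Schwaller describes can be sketched as a recursive, best-first search. In this sketch the single-step retro predictions, their scores, and the catalogue of commercially available molecules are a hard-coded toy table standing in for the transformer and the pathway scoring model; the function name `plan` and the depth limit are hypothetical choices.

```python
# Hypothetical single-step retro predictions: target -> [(precursors, score)].
# In RoboRXN a transformer produces these; here they are toy values.
RETRO_PREDICTIONS = {
    "CC(=O)OCC": [(("CC(=O)Cl", "OCC"), 0.9), (("CC(=O)O", "OCC"), 0.6)],
    "CC(=O)Cl": [(("CC(=O)O", "O=S(Cl)Cl"), 0.8)],
}
# Hypothetical catalogue of commercially available molecules.
COMMERCIAL = {"CC(=O)O", "OCC", "O=S(Cl)Cl"}


def plan(target, depth=0, max_depth=5):
    """Return reaction steps (product, precursors), ordered so every step's
    precursors are bought or made earlier; None if no route is found."""
    if target in COMMERCIAL:
        return []                      # nothing to make: buy it
    if depth >= max_depth:
        return None                    # give up on overly long routes
    # Try disconnections best-first (stand-in for the pathway scoring model).
    candidates = sorted(RETRO_PREDICTIONS.get(target, []),
                        key=lambda c: c[1], reverse=True)
    for precursors, _score in candidates:
        route = []
        for molecule in precursors:
            sub_route = plan(molecule, depth + 1, max_depth)
            if sub_route is None:
                break                  # this disconnection dead-ends
            route.extend(sub_route)
        else:
            return route + [(target, precursors)]
    return None


for product, precursors in plan("CC(=O)OCC"):
    print(" + ".join(precursors), ">>", product)
```

Here the search first makes acetyl chloride from acetic acid and thionyl chloride, then combines it with ethanol, stopping as soon as every leaf is in the catalogue. A molecule with no predictions that is not purchasable makes its branch fail, and the search falls back to the next-best disconnection.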

In the process of creating the AI, the team published their findings in several peer-reviewed journals and made their AI models available in a GitHub repository. Their latest paper, published in Nature in July, explores the use of transformers to translate chemical experiments written in prose into distinct steps. This is a key component in integrating the AI system with robotic labs, which expect distinct commands.
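The prose-to-actions step can be illustrated by the kind of structured output it targets. The sketch below swaps the transformer for a few hand-written regular expressions that only cope with tidy sentences; the `Action` fields and the sentence patterns are hypothetical, chosen to mirror the actions (add, stir, filter) and conditions (temperature, duration) Schwaller mentions.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Action:
    """One discrete step a robot could execute (hypothetical schema)."""
    name: str
    material: Optional[str] = None
    temperature_c: Optional[float] = None
    duration_h: Optional[float] = None


TEMPERATURE = re.compile(r"(-?\d+(?:\.\d+)?)\s*°C")
DURATION = re.compile(r"(\d+(?:\.\d+)?)\s*h\b")


def parse_procedure(text: str) -> list[Action]:
    """Rule-based stand-in for a prose-to-actions model."""
    actions = []
    # Split on sentence-ending periods, but not on decimal points like "2.5".
    for sentence in re.split(r"\.(?:\s+|$)", text.strip()):
        if not sentence:
            continue
        verb, _, rest = sentence.partition(" ")
        action = Action(name=verb.lower())
        if action.name == "add":
            action.material = rest
        if match := TEMPERATURE.search(sentence):
            action.temperature_c = float(match.group(1))
        if match := DURATION.search(sentence):
            action.duration_h = float(match.group(1))
        actions.append(action)
    return actions


procedure = "Add acetic acid. Add ethanol. Stir at 60 °C for 2.5 h. Filter."
for action in parse_procedure(procedure):
    print(action)
```

Rules like these break on the first unusual phrasing, which is why a learned model is the more practical route for real lab write-ups; the value of the sketch is the shape of the output, a list of discrete commands a robot can execute.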

“For a given target molecule, RoboRXN provides not only a recipe made of multiple chemical reactions that would lead from commercially available molecules to the target molecule, but is also able to generate for each step in the recipe, the specific actions that a robot or human has to perform to successfully run the reaction step,” Laino says.

To draw an analogy with cooking, if you ask the system how to cook pizza, one AI layer will predict the ingredients, and a second will predict the sequence of operations to go from the ingredients to the final dish.

“In all cases, the AI can choose between several predictions. We provide the ones with the highest confidence score, but a user can always override the recommendations and give human feedback,” Laino says. In Wednesday’s presentation, the team showed how a user could jump into the process by adding, removing, or modifying the steps predicted by the neural networks.
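The "highest confidence by default, human can override" behavior Laino describes can be sketched in a few lines. The candidate steps, their scores, and the `build_recipe` helper are hypothetical; the point is only that the default recipe comes from the top-scoring prediction at each step, and the chemist's edits take precedence.

```python
# Hypothetical per-step candidates: (step description, confidence score).
STEP_CANDIDATES = [
    [("add acetic acid", 0.92), ("add acetic anhydride", 0.05)],
    [("stir at 60 °C for 2 h", 0.71), ("stir at 25 °C for 12 h", 0.24)],
    [("filter", 0.88), ("concentrate", 0.10)],
]


def top_choice(candidates):
    """Pick the candidate with the highest confidence score."""
    return max(candidates, key=lambda c: c[1])[0]


def build_recipe(step_candidates, overrides=None):
    """Default to the top prediction at each step unless the chemist overrode it."""
    overrides = overrides or {}
    return [overrides.get(i, top_choice(c)) for i, c in enumerate(step_candidates)]


# The chemist modifies step 1 and inserts an extra step before filtering.
recipe = build_recipe(STEP_CANDIDATES, overrides={1: "stir at 25 °C for 12 h"})
recipe.insert(2, "cool to room temperature")
print(recipe)
```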

Tackling explainability issues

A pure neural network–based approach comes with some benefits. The AI models scale well with the availability of data. And the system will benefit from all the research going into deep learning in general and transformers in particular.

But deep learning comes with interpretability challenges. Neural networks are very good at finding and exploiting correlations between different data points in their training corpus, but those correlations do not necessarily have causal value and can yield erroneous results. The scientists employing the system should be able to explore and correct the reasoning used by the AI system.

The step-by-step procedure the system produces for creating the target molecule offers a level of explainability, making it easier for scientists to review the entire process. But the IBM researchers acknowledged that providing more granular explanations of the individual steps is still a work in progress.

Schwaller told TechTalks that the team has investigated BERT and ALBERT, two other transformer-based neural network architectures, to improve the interpretability of the predictions, classify them into named reactions, and link the predicted reactions back to similar reactions in the patents. The researchers have published their findings in two separate papers on the ChemRxiv preprint server.

“Recently, we have also investigated why language models learn organic chemistry and chemical reactions so well and discovered that, without human labelling or supervision Transformer models capture how atoms rearrange during a chemical reaction,” Laino adds. “From this so-called atom-mapping signal we can extract the rules and grammar of chemical reactions and make our prediction models more interpretable.”
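To make "how atoms rearrange" concrete, here is an atom-mapped reaction SMILES, in which each reactant atom carries a number (`:1`, `:2`, ...) that it keeps in the product. The mapping below was written by hand for a textbook esterification, and the regular expression only handles bracketed atoms; it illustrates the kind of information an atom-mapping signal carries, not the output of IBM's models.

```python
import re

# Bracketed, atom-mapped atoms such as [CH3:1]: capture element and map number.
MAPPED_ATOM = re.compile(r"\[([A-Z][a-z]?)[^\]:]*:(\d+)\]")


def mapped_atoms(smiles: str) -> dict[int, str]:
    """Return {map number: element} for every mapped atom in a SMILES string."""
    return {int(number): element for element, number in MAPPED_ATOM.findall(smiles)}


# Hand-mapped Fischer esterification: acetic acid + ethanol -> ethyl acetate.
reaction = (
    "[CH3:1][C:2](=[O:3])[OH:4].[CH3:5][CH2:6][OH:7]"
    ">>[CH3:1][C:2](=[O:3])[O:7][CH2:6][CH3:5]"
)
reactants, product = reaction.split(">>")
before, after = mapped_atoms(reactants), mapped_atoms(product)

# Atoms that leave the product (here: the acid's OH oxygen, which ends up in water).
print({n: before[n] for n in before.keys() - after.keys()})
# The ester oxygen in the product carries map number 7: it came from ethanol.
print(after[7])
```

Following the numbers shows which bonds break and form, which is the kind of "grammar" a model can expose when it learns the mapping without labels.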

The team has developed a visualization tool for the RXN AI models and made it available online.

Integration with the robotics lab

The original idea for the fully automated chemistry lab came when IBM presented RXN for Chemistry at the American Chemical Society annual exposition in 2018. “It was surprising to see that irrespective of the flaws that every data-driven model has (including RXN) the reaction of the chemical community was overwhelming—we actually had a line of people at our booth to try out demo,” Laino said. “We saw the real potential in front of us. I asked myself: Can an AI model drive an autonomous chemical lab?”

The idea of RoboRXN took shape after Laino discussed it with the rest of the team. “The rest was only an intense but gratifying run to build everything: the remaining AI models, the integration of commercially existing hardware and the deployment of all services in the cloud,” Laino says.

During the online presentation, Laino and his team ran a hypothetical experiment with RoboRXN. A user connected to the IBM Cloud application and provided a target molecule to RoboRXN. The AI system processed the request and provided a suggested instruction set for the experiment. After the user tweaked and confirmed the result, RoboRXN fed the commands to the robotic research lab and the experiment was kicked off. A live camera view allowed us to follow the steps as the robotic lab conducted the experiments.

The hardware used in the project is already commercially available, making it possible to integrate the system with robotic labs that organizations already have in place.

“Rather than developing our own hardware we decided to use industry standard hardware and use AI and Cloud to solve the issue of programming and accessing the robot remotely,” Laino said. “The project is hardware agnostic. Different types of hardware can be easily interfaced.”

The team also envisions RoboRXN scaling up to run parallel experiments. Research labs can use the platform to coordinate operations across multiple labs and speed up the process of testing hypotheses and gathering the results.

Research during the pandemic and beyond

Automated tools such as RoboRXN could give a boost to research labs and scientists who have been constrained by the COVID-19 lockdown.

“The pandemic rang a bell to each of us on how to integrate all existing digital solutions to avoid similar disruption in the future. Lab chemists, even today, are facing severe limitations to come back to work,” said Matteo Manica, machine learning researcher at IBM. “Computational scientists can work remotely, accessing supercomputing resources available online. We decided to provide the same at the level of a chemical lab. A chemical laboratory accessible remotely, that is supervised by AI and executed by robotic chemical hardware.”

But the benefits can go beyond providing remote access, helping direct the cognitive capacity of human scientists where it is needed most.

“RoboRXN can be considered for chemists what robotic vacuum cleaners are for humans. They do not necessarily make things faster, but they make things in a very reproducible way and during their work, you can focus on doing something else,” Laino said.

The increased adoption of automated labs will also generate more digital data, which can help improve the performance of the AI models in the future. Organizations can use the IBM Cloud to run RoboRXN and store the results obtained from the robotic labs. Alternatively, they can have the entire system installed on-premise or in a private cloud. IBM does not currently have plans to use data obtained from RoboRXN to finetune its AI models. Researchers using the platform can, however, integrate their own results with other open datasets and use them to train the deep learning models IBM has publicly made available.

This article was originally published by Ben Dickson on TechTalks, a publication that examines trends in technology, how they affect the way we live and do business, and the problems they solve. But we also discuss the evil side of technology, the darker implications of new tech and what we need to look out for. You can read the original article here.

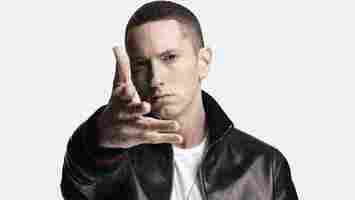

Watch AI Eminem diss the patriarchy in new music video

Eminem has an illustrious history of rap battles, but his controversial insults can infuriate sensitive listeners.

In recent weeks, a TikTok campaign called for Em to be “canceled” for glorifying violence against women in his single “Love the Way You Lie.”

Inevitably, Marshall Mathers fired back with a diss track taking aim at his critics. But he could surely find some more deserving adversaries than TikTok teens.

A new AI music video gives the rapper a more progressive target for his ire: the patriarchy.

The track was created by Calamity Ai, the same group that used bots to produce a new song for Hamilton and a previous Eminem diss of Mark Zuckerberg.

The team penned their latest lyrics using ShortlyAI, a text generator powered by OpenAI’s GPT-3.

They prompted the system to spit out a verse based on the following cue:

They then sent the lyrics to 30HZ, a self-professed creator of “synthetic parody songs and other poorly written material.” The producer synthesized the audio and converted the words into vocals.

And just in case Slim Shady needed back-up, the team generated a guest verse by another unlikely defender of women’s rights: Kanye West.

I’m pretty impressed by Em’s synthetic voice, although the lyrics are hit and miss at best.

He reserves most of his vitriol for producer Rick Rubin, a surprising choice given the litany of musicians accused of sexual abuse.

Still, Em comes across as refreshingly contrite when he discusses “the consequences when I spit poetry,” which he says are worth the risk if he “silences some men.”

Kanye West’s artificial voice is less convincing, and his verse isn’t going to win any lyrical awards. He does offer an apology to Taylor Swift, but begins it with a typically sexist “Yeah, bitch.”

The track will hardly shake up the patriarchy, although the lyrics are still sharper than, say, Lil Pump’s.

But could the AI Eminem win a rap battle against its human counterpart? I doubt it, but the showdown could help make Marshall relevant again.
