AI finds 250 foreign stars that migrated to our galaxy

Astrophysicists have used AI to discover 250 new stars in the Milky Way, which they believe were born outside the galaxy.

Caltech researcher Lina Necib named the collection Nyx, after the Greek goddess of the night. She suspects the stars are remnants of a dwarf galaxy that merged with the Milky Way many moons ago.

To develop the AI, Necib and her team first tracked stars across a simulated galaxy created by the Feedback in Realistic Environments (FIRE) project. They labeled the stars as either born in the host galaxy, or formed through galaxy mergers. These labels were used to train a deep learning model to spot where a star was born.

They then applied the model to data collected by the Gaia satellite, which was launched in 2013 to create a detailed 3D map of around 1 billion stars.

“We asked the neural network, ‘Based on what you’ve learned, can you label if the stars were accreted or not?’” said Necib.
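The workflow described above (train on labeled simulated stars, then label real ones) can be sketched in a few lines. This is only a toy illustration, not the team's method: it swaps the deep learning model for a plain logistic regression, and every number, feature, and function name here (`make_stars`, `features`, `train`) is an assumption invented for the example. The "observed" catalog is also synthetic, standing in for Gaia data.

```python
import math
import random

def make_stars(n, accreted, rng):
    """Draw n toy stars as (v_r, v_phi) velocities in km/s. Purely synthetic."""
    if accreted:
        # Assumption: merger debris has little net rotation and large radial motions.
        return [(rng.gauss(0, 150), rng.gauss(0, 60)) for _ in range(n)]
    # Assumption: stars born in the host galaxy's disk rotate at about 220 km/s.
    return [(rng.gauss(0, 35), rng.gauss(220, 30)) for _ in range(n)]

def features(star):
    """Scale velocities so that gradient descent behaves well."""
    v_r, v_phi = star
    return (abs(v_r) / 100.0, v_phi / 100.0)

def predict_proba(weights, bias, x):
    """Probability that a star with features x was accreted."""
    z = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1.0 / (1.0 + math.exp(-z))

def train(stars, labels, epochs=300, lr=0.5):
    """Fit a logistic regression with full-batch gradient descent."""
    weights, bias = [0.0, 0.0], 0.0
    n = len(stars)
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for star, y in zip(stars, labels):
            x = features(star)
            err = predict_proba(weights, bias, x) - y
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias

rng = random.Random(0)

# Step 1: label stars in the "simulation" (0 = born in host, 1 = accreted) and train.
sim_stars = make_stars(500, False, rng) + make_stars(500, True, rng)
sim_labels = [0] * 500 + [1] * 500
weights, bias = train(sim_stars, sim_labels)

# Step 2: apply the trained model to a separate "observed" catalog.
observed = make_stars(200, False, rng) + make_stars(200, True, rng)
truth = [0] * 200 + [1] * 200
flags = [predict_proba(weights, bias, features(s)) > 0.5 for s in observed]
accuracy = sum(f == bool(t) for f, t in zip(flags, truth)) / len(truth)
print(f"Flagged {sum(flags)} of {len(observed)} stars as accreted")
```

In the real study there is no ground truth for the observed stars, which is why the team first checked the model against known structures before trusting anything new it flagged.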

AI enters the cosmos

The team first checked if the model could spot known features in the data, such as “the Gaia sausage,” the remnants of a dwarf galaxy that smashed into the Milky Way billions of years ago. The collision ripped the dwarf apart and scattered its stars into the shape of a sausage.

Not only did the AI detect the distinctive sausage shape — it also found the new cluster of 250 stars.

“Your first instinct is that you have a bug,” Necib remembered. “And you’re like, ‘Oh no!’ So, I didn’t tell any of my collaborators for three weeks. Then I started realizing it’s not a bug, it’s actually real and it’s new.”

After confirming that the collection had never been discovered before, Necib got to name it, which she described as “the most exciting thing in astrophysics.” She now plans to further explore Nyx, and try to work out exactly where the stars were born.

Eureka: A family of computer scientists developed a blueprint for machine consciousness

Renowned researchers Manuel Blum and Lenore Blum have devoted their entire lives to the study of computer science with a particular focus on consciousness. They’ve authored dozens of papers and taught for decades at prestigious Carnegie Mellon University. And, just recently, they published new research that could serve as a blueprint for developing and demonstrating machine consciousness.

That paper, titled “A Theoretical Computer Science Perspective on Consciousness,” may only be a pre-print, but even if it crashes and burns at peer review (it almost surely won’t), it’ll still hold an incredible distinction in the world of theoretical computer science.

The Blums are joined by a third collaborator, one Avrim Blum, their son. Per the Blums’ paper:

Hats off to the Blums. There can’t be too many theoretical computer science families at the cutting edge of machine consciousness research. I’m curious what the family pet is like.

Let’s move on to the paper, shall we? It’s a fascinating and well-explained bit of hardcore research that very well could change some perspectives on machine consciousness.

Per the paper:

In this context, a CTM would appear to be any machine that can demonstrate consciousness. The big idea here isn’t necessarily the development of a thinking robot, but more so a demonstration of the core concepts of consciousness in hopes we’ll gain a better understanding of our own.

This requires the reduction of consciousness to something that can be expressed in mathematical terms. But it’s a little more complicated than just measuring waves. Here’s how the Blums put it:

Defining consciousness is only half the battle, and one that likely won’t be won until after we’ve aped it. The other side of the equation is observing and measuring consciousness. We can watch a puppy react to stimulus. Even plant consciousness can be observed. But for a machine to demonstrate consciousness, its observers have to be certain it isn’t merely imitating consciousness through clever mimicry.

Let’s not forget that GPT-3 can blow even the most cynical of minds with its uncanny ability to seem cogent, coherent, and poignant (let us also not forget that you have to hit “generate new text” a bunch of times to get it to do so because most of what it spits out is garbage).

The Blums get around this problem by designing a system that’s only meant to demonstrate consciousness. It won’t try to act human or convince you it’s thinking. This isn’t an art project. Instead, it works a bit like a digital hourglass where each grain of sand is information.

The machine sends and receives information in the form of “chunks” that contain simple pieces of information. There can be multiple chunks of information competing for mental bandwidth, but only one chunk of information is processed at a time. And, perhaps most importantly, there’s a delay in sending the next chunk. This allows chunks to compete – with the loudest, most important one often winning.

The winning chunks form the machine’s stream of consciousness. This allows the machine to demonstrate adherence to a theory of time and for it to experience the mechanical equivalent of pain and pleasure. According to the researchers, the competing chunks would have greater weight if the information they carried indicated the machine was in extreme pain:

A machine programmed with such a stream of consciousness would effectively have the bulk of its processing power (mental bandwidth) taken up by extreme amounts of pain. This, in theory, could motivate it to repair itself or deal with whatever’s threatening it.
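The competition between chunks can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the paper's formal definition: the `Chunk` fields, the example weights, and the rule that a chunk wins with probability proportional to its weight are all hypothetical choices made here to mirror the idea that the loudest chunk often, but not always, wins.

```python
import random
from dataclasses import dataclass

@dataclass
class Chunk:
    source: str    # hypothetical name of the processor that produced the chunk
    gist: str      # the simple piece of information the chunk carries
    weight: float  # how loudly the chunk competes (assumed non-negative)

def compete(chunks, rng):
    """Pick a single winner, with probability proportional to weight."""
    return rng.choices(chunks, weights=[c.weight for c in chunks], k=1)[0]

def stream_of_consciousness(chunk_batches, rng):
    """Process one winning chunk per tick and return the winners in order."""
    return [compete(batch, rng) for batch in chunk_batches]

rng = random.Random(42)

# One tick's worth of competing chunks; the pain chunk is far heavier.
tick = [
    Chunk("vision", "obstacle ahead", 2.0),
    Chunk("battery", "charge at 60%", 1.0),
    Chunk("damage sensor", "extreme pain in left wheel", 50.0),
]

# Replay the same competition over many ticks to see who dominates.
stream = stream_of_consciousness([tick] * 1000, rng)
pain_share = sum(c.source == "damage sensor" for c in stream) / len(stream)
print(f"Pain chunk won {pain_share:.0%} of ticks")
```

With these made-up weights, the pain chunk is expected to win about 50 out of every 53 ticks, so it crowds out nearly everything else. That is the sense in which extreme pain would take up the bulk of the machine's mental bandwidth.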

But, before we get that far, we’ll need to actually figure out if reverse-engineering the idea of consciousness down to the equivalent of high-stakes reinforcement learning is a viable proxy for being alive.

You can read the whole paper here.


China’s prototype Mars helicopter looks strikingly familiar…


China is developing a Mars helicopter — and it looks extremely similar to NASA’s Ingenuity.

A prototype of the “Mars surface cruise drone” was unveiled on Wednesday by China’s National Space Science Center (NSSC).

The announcement was spotted by SpaceNews’ Andrew Jones, who described the chopper as “more Familiarity than Ingenuity.”

My Mandarin’s a little rusty, but according to Google Translate, the chopper is equipped with a micro spectrometer.

NSSC didn’t reveal the purpose of the system, but spectroscopy has a rich history on Mars. Spectrometers have previously provided evidence of both flowing liquid water and methane on the planet.

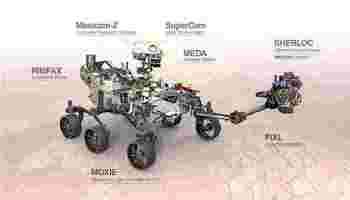

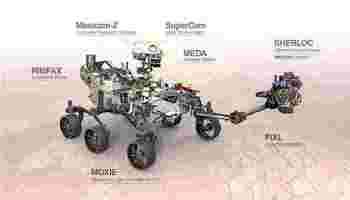

Spectrometers are also attached to two rovers that are currently exploring Mars: China’s Zhurong and NASA’s Perseverance.

Zhurong is equipped with a laser-induced breakdown spectrometer that’s investigating the make-up of rocks.

Perseverance, meanwhile, has two of the instruments: an X-ray fluorescence spectrometer that’s analyzing Martian surface materials, and a UV spectrometer that’s searching for signs of past microbial life.

The objectives of China’s new Mars drone, however, remain unclear for now. Whatever they are, the rotorcraft will have big footsteps to follow.

Its lookalike, Ingenuity, has long surpassed NASA’s initial goal of completing five test flights. The chopper has now completed a dozen trips, while capturing stunning images of the red planet en route.

NASA says the helicopter is paving the way for future rotorcraft missions on Mars. The image of China’s new prototype suggests that may well be the case.

HT: SpaceNews.
