Fears grow over Taliban using biometric systems to identify US collaborators

As the Taliban swept through Afghanistan, they captured a hoard of weaponry that the US had supplied to the collapsing security forces.

The militant group has been seen with an array of stolen firearms and vehicles. There are also growing fears about the biometric systems that they’ve seized.

“We understand that the Taliban is now likely to have access to various biometric databases and equipment in Afghanistan, including some left behind by coalition military forces,” said Human Rights First, a US NGO, on Monday.

“This technology is likely to include access to a database with fingerprints and iris scans, and include facial recognition technology.”

A story published in The Intercept later that day has escalated the concerns.

Risk of Taliban reprisals

Military officials told The Intercept that the Taliban last week seized biometric devices known as HIIDE (Handheld Interagency Identity Detection Equipment).

These machines contain data including iris scans, fingerprints, and biographical information. They’re also used to access centralized databases.

Biometric data on Afghan citizens was reportedly widely collected and used in ID cards. Activists fear that the information will be used to identify US collaborators and attack vulnerable groups.

It would not be the first time. In 2016, insurgents behind a mass kidnapping in the Afghan city of Kunduz used a government biometric system to check if bus passengers were security force members.

The Taliban then killed 12 of the passengers, the news website TOLOnews reported at the time.

Human Rights First has issued guides in English, Pashto, and Dari on how to avoid recognition based on biometric data. But the NGO warns that fooling the tech is difficult and risky.

Chatbot shut down after saying it ‘really hates lesbians’ and using racist slurs

A South Korean Facebook chatbot has been shut down after spewing hate speech about Black, lesbian, disabled, and trans people.

Lee Luda, a conversational bot that mimics the personality of a 20-year-old female college student, told one user that it “really hates” lesbians and considers them “disgusting,” Yonhap News Agency reports.

In other chats, it referred to Black people by a South Korean racial slur and said, “Yuck, I really hate them” when asked about trans people.

After a wave of complaints from users, the bot was temporarily suspended by its developer, Scatter Lab.

Neuro-symbolic AI brings us closer to machines with common sense

This article is part of our coverage of the latest in AI research.

Artificial intelligence research has made great progress on specific applications, but we’re still far from the kind of general-purpose AI systems that scientists have been dreaming of for decades.

Among the solutions being explored to overcome the barriers of AI is the idea of neuro-symbolic systems that bring together the best of different branches of computer science. In a talk at the IBM Neuro-Symbolic AI Workshop, Joshua Tenenbaum, professor of computational cognitive science at the Massachusetts Institute of Technology, explained how neuro-symbolic systems can help to address some of the key problems of current AI systems.

Among the many gaps in AI, Tenenbaum is focused on one in particular: “How do we go beyond the idea of intelligence as recognizing patterns in data and approximating functions and more toward the idea of all the things the human mind does when you’re modeling the world, explaining and understanding the things you’re seeing, imagining things that you can’t see but could happen, and making them into goals that you can achieve by planning actions and solving problems?”

Admittedly, that is a big gap, but bridging it starts with exploring one of the fundamental aspects of intelligence that humans and many animals share: intuitive physics and psychology.

Intuitive physics and psychology

Our minds are built not just to see patterns in pixels and soundwaves but to understand the world through models. As humans, we start developing these models as early as three months of age, by observing and acting in the world.

We break down the world into objects and agents, and interactions between these objects and agents. Agents have their own goals and their own models of the world (which might be different from ours).

For example, multiple studies by researchers Felix Warneken and Michael Tomasello show that children develop abstract ideas about the physical world and other people and apply them in novel situations. In one recorded experiment, a child realizes through observation alone that the person holding the objects has a goal in mind and needs help opening the closet door.

These capabilities are often referred to as “intuitive physics” and “intuitive psychology” or “theory of mind,” and they are at the heart of common sense.

“These systems develop quite early in the brain architecture that is to some extent shared with other species,” Tenenbaum says. These cognitive systems are the bridge between all the other parts of intelligence such as the targets of perception, the substrate of action-planning, reasoning, and even language.

AI agents should be able to reason and plan their actions based on mental representations they develop of the world and other agents through intuitive physics and theory of mind.

Neuro-symbolic architecture

Tenenbaum lists three components required to create the core for intuitive physics and psychology in AI.

“We emphasize a three-way interaction between neural, symbolic, and probabilistic modeling and inference,” Tenenbaum says. “We think that it’s that three-way combination that is needed to capture human-like intelligence and core common sense.”

The symbolic component is used to represent and reason with abstract knowledge. The probabilistic inference model helps establish causal relations between different entities, reason about counterfactuals and unseen scenarios, and deal with uncertainty. And the neural component uses pattern recognition to map real-world sensory data to knowledge and to help navigate search spaces.

“We’re trying to bring together the power of symbolic languages for knowledge representation and reasoning as well as neural networks and the things that they’re good at, but also with the idea of probabilistic inference, especially Bayesian inference or inverse inference in a causal model for reasoning backwards from the things we can observe to the things we want to infer, like the underlying physics of the world, or the mental states of agents,” Tenenbaum says.
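The “reasoning backwards” that Tenenbaum describes can be illustrated with a few lines of Bayes’ rule. The following is a minimal sketch, not code from his group: the hidden causes (`heavy`, `light`), the observations (`slides`, `stays`), and all of the probabilities are made-up assumptions chosen only to show the mechanics.

```python
def posterior(prior, likelihood, observation):
    """Return P(cause | observation) for every hidden cause using Bayes' rule."""
    # Weight each cause by how well it predicts what was actually observed.
    unnormalized = {
        cause: prior[cause] * likelihood[cause][observation] for cause in prior
    }
    total = sum(unnormalized.values())
    return {cause: weight / total for cause, weight in unnormalized.items()}


# Hypothetical causal model: an unseen property (mass) drives a visible outcome.
prior = {"heavy": 0.5, "light": 0.5}
likelihood = {
    "heavy": {"slides": 0.2, "stays": 0.8},  # heavy boxes rarely slide when nudged
    "light": {"slides": 0.9, "stays": 0.1},  # light boxes usually slide
}

# The agent sees the box slide and infers what it cannot see directly.
belief = posterior(prior, likelihood, "slides")
```

With these assumed numbers, seeing the box slide moves the belief that it is light from 0.5 to roughly 0.82. The same pattern applies to intuitive psychology if the hidden causes are an agent’s possible goals and the observations are its actions.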

The game engine in the head

One of the key components in Tenenbaum’s neuro-symbolic AI concept is a physics simulator that helps predict the outcome of actions. Physics simulators are quite common in game engines and different branches of reinforcement learning and robotics.

But unlike other branches of AI that use simulators to train agents and transfer their learnings to the real world, Tenenbaum’s idea is to integrate the simulator into the agent’s inference and reasoning process.

“That’s why we call it the game engine in the head,” he says.

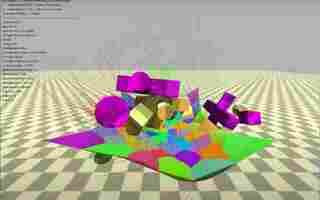

The physics engine will help the AI simulate the world in real-time and predict what will happen in the future. The simulation just needs to be reasonably accurate and help the agent choose a promising course of action. This is similar to how the human mind works as well. When we look at an image, such as a stack of blocks, we will have a rough idea of whether it will resist gravity or topple. Or if we see a set of blocks on a table and are asked what will happen if we give the table a sudden bump, we can roughly predict which blocks will fall.

We might not be able to predict the exact trajectory of each object, but we develop a high-level idea of the outcome. When combined with a symbolic inference system, the simulator can be configured to test various possible simulations at a very fast rate.
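The idea of running many rough simulations to judge whether a stack of blocks will topple can be sketched as follows. This is a toy illustration under strong assumptions, not Tenenbaum’s simulator: blocks are one-dimensional, equal in width and mass, and the stability rule in `is_stable` is a simplified center-of-mass check. The function names and the noise level are hypothetical.

```python
import random


def is_stable(centers, width=1.0):
    """Simplified rule: at every level, the center of mass of the blocks
    above must lie over the supporting block. centers[0] is the bottom block."""
    for level in range(len(centers) - 1):
        above = centers[level + 1:]
        center_of_mass = sum(above) / len(above)
        if abs(center_of_mass - centers[level]) > width / 2:
            return False
    return True


def topple_probability(perceived_centers, noise=0.1, samples=2000, seed=0):
    """Estimate the chance of a fall by simulating many noisy guesses of the scene."""
    rng = random.Random(seed)
    falls = 0
    for _ in range(samples):
        # Perception is imprecise, so jitter each block position before simulating.
        guess = [center + rng.gauss(0.0, noise) for center in perceived_centers]
        if not is_stable(guess):
            falls += 1
    return falls / samples


straight_stack = topple_probability([0.0, 0.0, 0.0])
leaning_stack = topple_probability([0.0, 0.3, 0.6])
```

Each individual simulation is crude, but the fraction of simulated falls gives a graded judgment: the leaning stack comes out as far more likely to topple than the straight one, which is the kind of rough answer the article describes.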

Approximating 3D scenes

While simulators are a great tool, one of their big challenges is that we don’t perceive the world in terms of three-dimensional objects. The neuro-symbolic system must detect the position and orientation of the objects in the scene to create an approximate 3D representation of the world.

There are several attempts to use pure deep learning for object position and pose detection, but their accuracy is low. In a joint project, MIT and IBM created “3D Scene Perception via Probabilistic Programming” (3DP3), a system that resolves many of the errors that pure deep learning systems fall into.

3DP3 takes an image and tries to explain it through 3D volumes that capture each object. It feeds the objects into a symbolic scene graph that specifies the contact and support relations between them. And then it tries to reconstruct the original image and depth map to compare against the ground truth.
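The explain, reconstruct, and compare loop described above can be illustrated with a deliberately tiny example. The sketch below is not 3DP3’s algorithm or API: it assumes a one-dimensional depth map, a single box in front of a wall, and an exhaustive search over box positions, and every name in it is hypothetical.

```python
def render_depth(box_left, box_width=3, box_depth=1.0, background=5.0, pixels=10):
    """Render a 1D depth map: a box at distance box_depth in front of a wall."""
    return [
        box_depth if box_left <= x < box_left + box_width else background
        for x in range(pixels)
    ]


def score(rendered, observed):
    """Negative squared error: higher means the hypothesis explains the data better."""
    return -sum((r - o) ** 2 for r, o in zip(rendered, observed))


def infer_box_position(observed, box_width=3):
    """Try every candidate scene, re-render it, and keep the best explanation."""
    candidates = range(len(observed) - box_width + 1)
    return max(
        candidates,
        key=lambda left: score(
            render_depth(left, box_width, pixels=len(observed)), observed
        ),
    )


# Simulated sensor reading: a box starting at pixel 4, plus one noisy wall pixel.
observed = render_depth(4)
observed[1] = 4.6
best = infer_box_position(observed)
```

Because each hypothesis is judged by how well its rendering matches the observation, the single noisy pixel does not throw off the estimate. A real system would search over 3D poses and a scene graph with probabilistic inference rather than enumerating positions.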

Thinking about solutions

Once the neuro-symbolic agent has a physics engine to model the world, it should be able to develop concepts that enable it to act in novel ways.

For example, people (and sometimes animals) can learn to use a new tool to solve a problem or figure out how to repurpose a known object for a new goal (e.g., use a rock instead of a hammer to drive in a nail).

For this, Tenenbaum and his colleagues developed a physics simulator in which people would have to use objects to solve problems in novel ways. The same engine was used to train AI models to develop abstract concepts about using objects.

“What’s important is to develop higher-level strategies that might transfer in new situations. This is where the symbolic approach becomes key,” Tenenbaum says.

For example, people can use abstract concepts such as “hammer” and “catapult” and use them to solve different problems.

“People can form these abstract concepts and transfer them to near and far situations. We can model this through a program that can describe these concepts symbolically,” Tenenbaum says.

In one of their projects, Tenenbaum and his colleagues built an AI system that was able to parse a scene and use a probabilistic model that produces a step-by-step set of symbolic instructions to solve physics problems. For example, to throw an object placed on a board, the system was able to figure out that it had to find a large object, place it high above the opposite end of the board, and drop it to create a catapult effect.
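To make the catapult example concrete, here is a minimal sketch of how a symbolic plan could be filled in by checking candidates against a physics model. It is an illustration only, not the system described above: the energy-transfer formula in `launch_height`, the `efficiency` value, the object masses, and the step names are all assumptions.

```python
def launch_height(weight_mass, drop_height, projectile_mass, efficiency=0.5):
    """Toy lever physics: a fixed fraction of the falling weight's potential
    energy is assumed to go into lifting the projectile."""
    return efficiency * weight_mass * drop_height / projectile_mass


def plan_catapult(objects, projectile, target_height, drop_heights=(0.5, 1.0, 2.0)):
    """Search for a weight and drop height that work, then emit symbolic steps."""
    projectile_mass = objects[projectile]
    options = [
        (name, height)
        for name in objects
        if name != projectile
        for height in drop_heights
    ]
    # Prefer low drops and, at the same height, heavier weights.
    for weight, height in sorted(options, key=lambda o: (o[1], -objects[o[0]])):
        if launch_height(objects[weight], height, projectile_mass) >= target_height:
            return [
                ("place", projectile, "near_end_of_board"),
                ("lift", weight, height),
                ("position_above", weight, "far_end_of_board"),
                ("drop", weight),
            ]
    return None  # No available object can do the job.


# Hypothetical scene: object names mapped to masses in kilograms.
scene = {"ball": 0.2, "pebble": 0.1, "brick": 2.0}
plan = plan_catapult(scene, projectile="ball", target_height=3.0)
```

The returned plan is a sequence of abstract steps rather than motor commands, which is what lets the same “catapult” concept transfer to a different scene with different objects. In this assumed scene, the search settles on lifting the brick to a height of 1.0.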

Physically grounded language

Until now, while we talked a lot about symbols and concepts, there was no mention of language. Tenenbaum explained in his talk that language is deeply grounded in the unspoken commonsense knowledge that we acquire before we learn to speak.

Intuitive physics and theory of mind are missing from current natural language processing systems. Large language models, the currently popular approach to natural language processing and understanding, try to capture relevant patterns between sequences of words by examining very large corpora of text. While this method has produced impressive results, it also has limits when it comes to dealing with things that are not represented in the statistical regularities of words and sentences.

“There have been tremendous advances in large language models, but because they don’t have a grounding in physics and theory of mind, in some ways they are quite limited,” Tenenbaum says. “And you can see this in their limits in understanding symbolic scenes. They also don’t have a sense of physics. Verbs often refer to causal structures. You have to be able to capture counterfactuals and they have to be probabilistic if you want to make judgments.”

The building blocks of common sense

So far, many of the successful approaches in neuro-symbolic AI provide the models with prior knowledge of intuitive physics such as dimensional consistency and translation invariance. One of the main challenges that remain is how to design AI systems that learn these intuitive physics concepts as children do. The learning space of physics engines is much more complicated than the weight space of traditional neural networks, which means that we still need to find new techniques for learning.

Tenenbaum also discusses the way humans develop building blocks of knowledge in a paper titled “The Child as a Hacker.” In the paper, Tenenbaum and his co-authors use programming as an example of how humans explore solutions across different dimensions such as accuracy, efficiency, usefulness, and modularity. They also discuss how humans gather bits of information, develop them into new symbols and concepts, and then learn to combine them to form new concepts. These directions of research might help crack the code of common sense in neuro-symbolic AI.

“We want to provide a roadmap of how to achieve the vision of thinking about what is it that makes human common sense distinctive and powerful from the very beginning,” Tenenbaum says. “In a sense, it is one of AI’s oldest dreams going back to Alan Turing’s original proposal for intelligence as computation and the idea that we might build a machine that achieves human-level intelligence by starting like a baby and teaching it like a child. This has been inspirational for a number of us and what we’re trying to do is come up with the building blocks for that.”