Google says it won’t build new AI solutions for the fossil fuel industry

File this under: minimal possible effort. Google today confirmed it will “no longer develop artificial intelligence (AI) software and tools for oil and gas drilling operations.”

Will Grannis, Managing Director of the Office of the CTO at Google Cloud, spoke with CUBE’s John Furrier earlier this month in an interview in which he revealed the end of Google’s future involvement with fossil fuel extraction.

As The Hill’s Alexandra Kelly reports, this move comes hot on the heels of a Greenpeace exposé showing that Google is one of the top three developers of AI and machine learning solutions for the fossil fuel industry.

Kelly wrote:

It appears as though Google’s reached the magical apex where profits and public opinion line up: in this case it ain’t making what it used to off the fossil fuel industry, and people’s opinions on big oil are degrading over time. Now’s probably the most logical time to cut those ties.

Besides, as the Greenpeace report shows, big oil still has Microsoft and Amazon to help with its AI needs:

Credit: Greenpeace

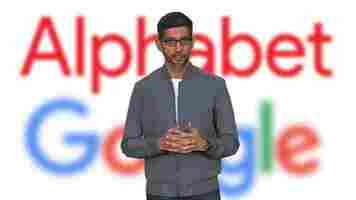

Quick take: Google doesn’t have anything to lose here. Not to mention, it hasn’t yet committed to not renewing its legacy contracts with big oil, so who knows whether this gesture has any merit. Like everything with the Mountain View company under CEO Sundar Pichai’s leadership, we’ll have to wait and see whether the company sticks to its guns here.

If this is the start of Google and Alphabet extricating themselves entirely from working with big oil, it deserves a hearty tip of the hat. I’ll keep mine on until we see more evidence than a company statement.

TNW reached out to Google for comment and we’ll update this article if we hear back.

This open-source AI tool can make your video spectacular with sky replacement effects

Sky replacement tools are extremely popular in the current crop of photo-editing apps. Mobile apps such as Lightricks’ Lightleap help you put a new sky in your picture quickly. For a more refined option, you may want to turn to Adobe Photoshop’s recently released AI-powered feature.

However, there aren’t many commercially available tools for such effects in video. A new open-source tool takes a crack at replacing the sky in clips with AR effects. The unique thing about this AI-powered tool is that it can also augment objects in the sky and adjust the footage as if they were actually present during shooting.

The model doesn’t depend on the device used to shoot the footage, so you can use clips captured even on your phone. Plus, you can use a photo or a video in place of the sky. It can even create a Skybox effect to make your video look larger than life. Imagine sticking a giant spaceship into a clip of your road trip!

There are a couple of limitations to this model. First, it’s trained only on daytime skies, so if your video was shot at night, the tool will not work effectively. Second, if the sky is clear with no objects in sight, it might replace some of the foreground as well. Not an ideal result for video editors.

However, this is a great starting point for potential mainstream tools to experiment with and integrate into their products.

You can read the full paper, titled “Castle in the Sky,” here. You can even give it a spin on your Windows laptop by following this tutorial.

A beginner’s guide to AI: Policy

Welcome to Neural’s beginner’s guide to AI. This long-running series should provide you with a very basic understanding of what AI is, what it can do, and how it works.

In addition to the article you’re currently reading, the guide contains articles on (in order published) neural networks, computer vision, natural language processing, algorithms, artificial general intelligence, the difference between video game AI and real AI, the difference between human and machine intelligence, and ethics.

In this edition of the guide, we’ll take a glance at global AI policy.

The US, China, Russia, and Europe each approach artificial intelligence development and regulation differently. In the coming years it will be important for everyone to understand what those differences can mean for our safety and privacy.

Yesterday

Artificial intelligence has traditionally been swept in with other technologies when it comes to policy and regulation.

That worked well in the days when algorithm-based tech was mostly used for data processing and crunching numbers. But the deep learning explosion that began around 2014 changed everything.

In the years since, we’ve seen the inception and mass adoption of privacy-smashing technologies such as virtual assistants, facial recognition, and online trackers.

Just a decade ago, our biggest privacy concerns as citizens involved worrying about the government tracking us through our cell phone signals or snooping on our email.

Today, we know that AI trackers are following our every move online. Cameras record everything we do in public, even in our own neighborhoods, and at least 40 million smart speakers were sold in Q4 of 2020 alone.

Today

Regulators and government entities around the world are trying to catch up to the technology and implement policies that make sense for their particular brand of governance.

In the US, there’s little in the way of regulation. In fact, the US government is heavily invested in many AI technologies the global community considers problematic. It develops lethal autonomous weapons (LAWS), its policies allow law enforcement officers to use facial recognition and internet crawlers without oversight, and there are no rules or laws prohibiting “snake oil” predictive AI services.

In Russia, the official policy is one of democratizing AI research by pooling data. A preview of the nation’s first AI policy draft indicates Russia plans to develop tools that allow its citizens to control and anonymize their own data.

However, the Russian government has also been connected to adversarial AI operations targeting governments and civilians around the globe. It’s difficult to discern what rules Russia’s private sector will face when it comes to privacy and AI.

And, to the best of our knowledge, there’s no declassified data on Russia’s military policies concerning AI. The best we can do is speculate based on past reports and statements made by the country’s current leader, Vladimir Putin.

Putin, speaking to Russian students in 2017, said “whoever becomes the leader in this sphere will become the ruler of the world.”

China, on the other hand, has been relatively transparent about its AI programs. In 2017, China released the world’s first robust AI policy plan, one that incorporated modern deep learning technologies and anticipated future machine learning tech.

The PRC intends to be the global leader in AI technology by 2030. Its program to achieve this goal includes massive investments from the private sector, academia, and the government.

US military leaders believe China’s military policies concerning AI are aimed at the development of LAWS that don’t require a human in the loop.

Europe’s vision for AI policy is a bit different. Where the US, China, and Russia appear focused on the military and competitive financial aspects of AI, the EU is defining and crafting policies that put privacy and citizen safety at the forefront.

In this respect, the EU currently seeks to limit facial recognition and other data-gathering technologies and to ensure citizens are explicitly informed when a product or service records their information.

The future

Predicting the future of AI policy is a tricky matter. Not only do we have to take into account how each nation currently approaches development and regulation, but we have to try to imagine how AI technology itself will advance in each country.

Let’s start with the EU:

In Russia, of course, things are different:

Moving to China, the future’s a bit easier to predict:

And that just brings us to the US:

At the end of the day, it’s impossible to make strong predictions because politicians around the globe are still generally ignorant when it comes to the reality of modern AI and the most likely scenarios for the future.

Technology policy is often a reactionary discipline: countries tend to regulate things only after they’ve proven problematic. And, we don’t know what major events or breakthroughs could prompt radical policy change for any given nation.

In 2021, the field of artificial intelligence is at an inflection point. We’re between eurekas, waiting on autonomy to come of age, and hoping that our world leaders can come to a safe accord concerning LAWS and international privacy regulations.