Mushrooms on Mars is a hoax — stop believing hack ‘scientists’

There is no evidence that mushrooms or any other form of life exists on Mars.

The Mars rover did not take a picture of fungi growing on the planet. And so-called ‘scientists’ did not explain the existence of fungi on Mars in a research paper.

If there are mushrooms on Mars, the people on our planet have yet to uncover any evidence of their existence.

But what about the headlines?

Simply put: they’re bunk. As far as we can tell, all of the recent articles discussing the “discovery of fungi” on Mars are based on a recently published research paper discussing photographs from the Mars rover.

The paper is titled “Fungi on Mars? Evidence of Growth and Behavior From Sequential Images.” And it’s a doozy.

Per the paper:

Okay. Uh… check, please?

The only thing the researchers appear to get right is that some fungi do thrive in radiation-filled environments. But those environments are all on Earth. The persistent radiation at the Chernobyl site, for example, is not equal to, analogous to, or equivalent to the radiation we find in deep space or on Mars.

After that, the researchers claim that NASA images show the Mars rover destroying a patch of fungi with its tracks and a subsequent new patch of fungi growing in its wake.

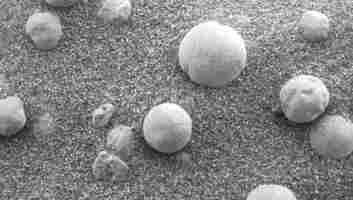

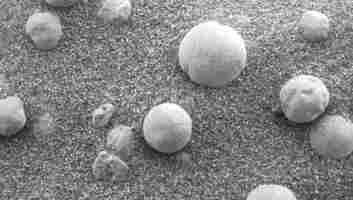

Here are the pics:

If you believe those images demonstrate fungus growing on Mars, I’m about to blow your frickin’ mind. Check out this pic:

You see that? To heck with fungus, that’s an entire highway growing out of the sand in front of a moving bus. You can clearly see that the Earth’s sandy crust is being broken apart as the expanding highway organism grows beneath it.

Or, if you’re the “Occam’s Razor” type: the wind is just blowing sand around.

I’ve never been to Mars, but I’m led to believe there are rocks, dust, and wind. Do we really need to go any further in debunking this nonsense?

You can read the whole paper here. But before you do, may I suggest you read about the history of its lead author?

This ‘scientist’ has been pushing the same theory for a long time, and the most recent paper is just more junk.

At the end of the day, science isn’t done by looking at an image, coming to a conclusion, and then listing pre-existing facts about mushrooms until you’ve convinced yourself there’s life on an alien planet and NASA’s trying to cover it up.

Greetings Humanoids! Did you know we have a newsletter all about AI? You can subscribe to it right here.

Programming in ‘natural’ language is coming sooner than you think

Sometimes major shifts happen virtually unnoticed. On May 5, IBM announced Project CodeNet to very little media or academic attention.

CodeNet is a follow-up to ImageNet, a large-scale dataset of images and their descriptions; the images are free for non-commercial uses. ImageNet is now central to the progress of deep learning for computer vision.

CodeNet is an attempt to do for artificial intelligence (AI) coding what ImageNet did for computer vision: it is a dataset of over 14 million code samples, covering 50 programming languages, written to solve 4,000 coding problems. The dataset also contains a wealth of additional data, such as the amount of memory the software requires to run and the log outputs of the running code.

Accelerating machine learning

IBM’s own stated rationale for CodeNet is that it is designed to swiftly update legacy systems programmed in outdated code, a development long awaited since the Y2K panic over 20 years ago, when many believed that undocumented legacy systems could fail with disastrous consequences.

However, as security researchers, we believe the most important implication of CodeNet (and similar projects) is its potential to lower the barriers to coding, and the possibility of Natural Language Coding (NLC).

In recent years, companies such as OpenAI and Google have been rapidly improving Natural Language Processing (NLP) technologies. These are machine learning-driven programs designed to better understand and mimic natural human language and to translate between different languages. Training machine learning systems requires access to a large dataset of texts written in the desired human languages. NLC applies all this to coding, too.

Coding is a difficult skill to learn, let alone master, and an experienced coder would be expected to be proficient in multiple programming languages. NLC, in contrast, leverages NLP technologies and a vast database such as CodeNet to enable anyone to code in English, or ultimately in French, Chinese or any other natural language. It could make a task like designing a website as simple as typing “make a red background with an image of an airplane on it, my company logo in the middle and a contact me button underneath,” and that exact website would spring into existence, the result of automatically translating natural language into code.

It is clear that IBM was not alone in its thinking. GPT-3, OpenAI’s industry-leading NLP model, has been used to let people code a website or app by writing a description of what they want. Soon after IBM’s news, Microsoft announced it had secured exclusive rights to GPT-3.

Microsoft also owns GitHub, the largest collection of open source code on the internet, which it acquired in 2018. The company has added to GitHub’s potential with GitHub Copilot, an AI assistant. When the programmer describes the action they want to code, Copilot generates a code sample that could achieve what they specified. The programmer can then accept the AI-generated sample, edit it or reject it, drastically simplifying the coding process. Copilot is a huge step towards NLC, but it is not there yet.

Consequences of natural language coding

Although NLC is not yet fully feasible, we are moving quickly towards a future where coding is much more accessible to the average person. The implications are huge.

First, there are consequences for research and development. It has been argued that the greater the number of potential innovators, the higher the rate of innovation. By removing barriers to coding, the potential for innovation through programming expands.

Further, academic disciplines as varied as computational physics and statistical sociology increasingly rely on custom computer programs to process data. Decreasing the skill required to create these programs would make it easier for researchers in specialized fields outside computer science to deploy such methods and make new discoveries.

However, there are also dangers. Ironically, one is the de-democratization of coding. Currently, numerous coding platforms exist. Some of them offer features that different programmers favor; however, none offers a decisive competitive advantage. A new programmer could easily use a free, “bare bones” coding terminal and be at little disadvantage.

But AI at the level required for NLC is not cheap to develop or deploy and is likely to be monopolized by major platform corporations such as Microsoft, Google or IBM. The service may be offered for a fee or, like most social media services, for free but with unfavorable or exploitative conditions for its use.

There is also reason to believe that such technologies will be dominated by platform corporations because of the way machine learning works. In theory, programs such as Copilot improve when exposed to new data: the more they are used, the better they become. This makes it harder for new competitors to break in, even if they have a stronger or more ethical product.

Unless there is a serious counter effort, it seems likely that large capitalist conglomerates will be the gatekeepers of the next coding revolution.

Article by David Murakami Wood, Associate Professor in Sociology, Queen’s University, Ontario and David Eliot, Masters Student, Surveillance Studies, Queen’s University, Ontario

This article is republished from The Conversation under a Creative Commons license. Read the original article.

Spotify’s new Only You feature will tell you that you’re special, but you’re probably not

If you ever feel like a boring normie, Spotify wants to let you know that at least your taste in music is special.

The streaming giant today launched Only You, a new feature that generates personalized playlists and pays you fawning compliments for your “unique” listening habits.

The experience is similar to Spotify Wrapped, with a visual breakdown of your streaming patterns, a Stories-like interface, and new insights about your tastes.

You can find a “unique audio pairing” of tracks you play back-to-back, songs you listen to at certain times of the day, and the years of musical history that you frequently stream.

Another highlight lets you choose three artists you’d invite to your dream dinner party. Spotify will then create a personalized playlist for each of them.

There’s also an “audio birth chart” that shows the artist you’ve listened to most over the past six months, a musician you’ve recently discovered, and one that “best shows your emotional or vulnerable side.”

The feature has a shareable format that could prove a hit on social media. It also provides another way for Spotify to signal that its algorithms promote diverse listening, rather than homogenize popular music. But Only You’s evidence for your individuality is questionable at best.

I wouldn’t consider playing Marvin Gaye’s “Inner City Blues (Make Me Wanna Holler)” to be “My Unique Moment,” for instance. I imagine it’s one that’s been shared by many people who are justifiably despairing about the state of the world.

There’s also an irony in Spotify complimenting as unique the very habits that its algorithms so often guide. In a sense, by praising you, Spotify is also commending itself.
