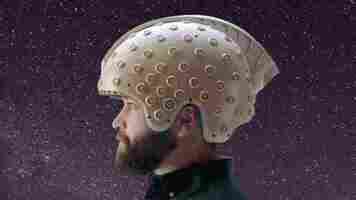

New AI headset analyzes astronauts’ brains to prep them for long-term space travel

A SpaceX Dragon spacecraft is due to lift off this week with some unusual cargo on board: an EEG headset.

The device will fly to the International Space Station (ISS) for a first-of-its-kind experiment.

The mission? Analyzing the neurological activity of astronauts to understand how microgravity affects the brain.

There is still little known about the effects of space travel on the brain. While astronauts are typically measured for various physiological changes, from heart rate to muscle mass, there is currently no high-quality longitudinal data about neural changes during space missions.

This information could be crucial in understanding how the brain adapts to long-term space travel.

“In future missions, the journeys will last much longer, and the effects of microgravity on the condition of astronauts will have a major impact,” said Yair Levy, the CEO of brain.space, the company behind the device.

“Then we will have a tool that can measure the impact on cognition — and we can invent tools that can regain cognitive capacity during the mission.”

The first step towards this goal is testing the headset on the astronauts of Axiom-1 (AX-1), the world’s first all-private mission to the ISS.

The brain.space system uses electroencephalography (EEG) to pick up tiny electrical signals produced when neurons in the brain communicate with each other. AI then denoises the signals and interprets the data.
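The article doesn't say how brain.space's AI cleans the raw signal. To illustrate what "denoising" means for EEG, here is a minimal classical stand-in in Python: a plain moving-average filter, which is neither AI nor the company's method. It assumes a hypothetical 250 Hz sampling rate, a synthetic 10 Hz "brain rhythm," and 50 Hz power-line interference; all of these numbers are invented for the example.

```python
import math

def moving_average(signal, window):
    """Centered moving average; edge samples where the window does not fit are dropped."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    return [
        sum(signal[i - half:i + half + 1]) / window
        for i in range(half, len(signal) - half)
    ]

def rms(values):
    """Root-mean-square of a list of numbers."""
    return math.sqrt(sum(v * v for v in values) / len(values))

FS = 250        # hypothetical sampling rate, Hz
ALPHA_HZ = 10   # synthetic brain rhythm we want to keep
MAINS_HZ = 50   # synthetic power-line interference we want to remove

t = [n / FS for n in range(FS)]  # one second of sample times
clean = [math.sin(2 * math.pi * ALPHA_HZ * x) for x in t]
noisy = [c + 0.8 * math.sin(2 * math.pi * MAINS_HZ * x) for c, x in zip(clean, t)]

WINDOW = FS // MAINS_HZ  # 5 samples = exactly one 50 Hz period at 250 Hz
HALF = WINDOW // 2
filtered = moving_average(noisy, WINDOW)
aligned_clean = clean[HALF:len(clean) - HALF]  # drop the same edge samples

error_before = rms([n - c for n, c in zip(noisy[HALF:len(noisy) - HALF], aligned_clean)])
error_after = rms([f - c for f, c in zip(filtered, aligned_clean)])
print(f"RMS error before: {error_before:.3f}, after: {error_after:.3f}")
```

Because the 5-sample window spans exactly one 50 Hz period, the interference averages out, while the 10 Hz rhythm keeps about 94% of its amplitude. Real EEG noise (muscle activity, eye blinks, electrode movement) is far less regular than a single sinusoid, so this only illustrates the idea.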

Previous neural studies in space used low-resolution gel-based EEG systems, but these were complex to set up and only measured basic brain signals.

Brain.space replaced the gel with a dry system of around 500 sensors that look like tiny brushes. The company says this makes the headset easier to use and more effective.

The device has already taken baseline measurements of the astronauts’ cognition on Earth. Once the crew reaches the ISS, the system’s software will be set up on a laptop on board the station.

Each of the three crew members will then wear the device for three 20-minute periods spread across the eight-day mission. Data collected in orbit will be transferred to brain.space and Ben-Gurion University researchers after each session for analysis.

After the astronauts return to Earth, the same experiments will be performed to assess the after-effects of microgravity.

Brain.space was initially founded to analyze neurological activity during treatment for brain injuries.

Ultimately, the company wants to develop a big data platform that researchers, developers, and medical practitioners can use to integrate brain activity into their products and services.

The company hopes the ISS experiment will join the list of space projects that have improved life on Earth. But for now, it’s focused on preparing our brains for long-term space travel and off-world living.

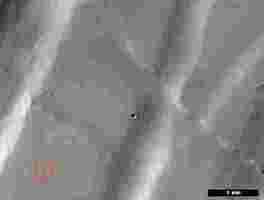

NASA releases images of the first craters on Mars discovered by AI

NASA has unveiled images of the first-ever craters on Mars discovered by AI.

The system spotted the craters by scanning photos taken by NASA’s Mars Reconnaissance Orbiter, which was launched in 2005 to study the history of water on the Red Planet.

The cluster it detected was created by fragments of a single meteor that shattered while flying through the Martian sky at some point between March 2010 and May 2012. The fragments landed in Noctis Fossae, a long, narrow, shallow depression on Mars, leaving behind a series of craters spread across about 100 feet (30 meters) of the planet’s surface.

The largest of the craters was about 13 feet (4 meters) wide — a relatively small dent that is tricky for the human eye to spot.

Scientists would normally search for these craters by laboriously scanning images captured by the NASA Mars Reconnaissance Orbiter’s Context Camera with their own eyes. The camera takes low-resolution pictures covering hundreds of miles at a time, and it takes a researcher around 40 minutes to scan a single image.

To save time and increase the number of future findings, scientists and AI researchers at NASA’s Jet Propulsion Laboratory (JPL) in Southern California teamed up to develop a tool called the automated fresh impact crater classifier.

They trained the classifier by feeding it 6,830 Context Camera images. This dataset contained a range of previously confirmed craters, as well as pictures with no fresh impacts to show the AI what not to look for.
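The article doesn't describe the classifier's architecture. As a rough sketch of the training idea only (labeled positive examples of confirmed craters plus negative examples showing no fresh impact), here is a tiny logistic-regression classifier in pure Python. The two features per image, and their values, are hypothetical stand-ins for whatever the real model extracts from Context Camera pixels.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic_regression(samples, labels, lr=0.5, epochs=5000):
    """Fit weights and a bias by batch gradient descent on the log-loss."""
    n_features = len(samples[0])
    weights = [0.0] * n_features
    bias = 0.0
    m = len(samples)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(samples, labels):
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            err = p - y  # gradient of log-loss w.r.t. the logit
            for j in range(n_features):
                grad_w[j] += err * x[j]
            grad_b += err
        weights = [w - lr * g / m for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / m
    return weights, bias

def predict_proba(model, x):
    """Probability that an image contains a fresh crater, per the toy model."""
    weights, bias = model
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

# Hypothetical features per image: [dark-spot contrast, dark-spot area], scaled to 0-1.
crater_images = [[0.9, 0.8], [0.8, 0.6], [0.7, 0.9], [0.85, 0.7]]      # label 1
no_impact_images = [[0.1, 0.2], [0.2, 0.1], [0.3, 0.3], [0.15, 0.25]]  # label 0

samples = crater_images + no_impact_images
labels = [1] * len(crater_images) + [0] * len(no_impact_images)
model = train_logistic_regression(samples, labels)

new_image = [0.75, 0.65]
print(f"P(fresh crater) = {predict_proba(model, new_image):.2f}")
```

Images scoring above 0.5 would be flagged as candidates, and a person would still confirm them, mirroring the human check the team kept in the loop.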

They then applied the classifier to the Context Camera’s entire repository of about 112,000 images. This cut the roughly 40 minutes a scientist typically needs to check each image down to an average of just five seconds. However, the classifier still needed a human to check its work.
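A quick back-of-the-envelope calculation shows what that speed-up means across the whole archive. It uses only the figures reported here (about 112,000 images, about 40 minutes per image for a person, about five seconds per image for the classifier) and assumes a single reviewer or a single machine working nonstop, ignoring the time humans spend checking flagged images.

```python
IMAGES = 112_000                  # approximate size of the Context Camera archive
HUMAN_MINUTES_PER_IMAGE = 40      # manual scan time per image
CLASSIFIER_SECONDS_PER_IMAGE = 5  # average classifier time per image

human_hours = IMAGES * HUMAN_MINUTES_PER_IMAGE / 60
classifier_hours = IMAGES * CLASSIFIER_SECONDS_PER_IMAGE / 3600
speedup = (HUMAN_MINUTES_PER_IMAGE * 60) / CLASSIFIER_SECONDS_PER_IMAGE

print(f"Manual review:   {human_hours:,.0f} hours "
      f"(~{human_hours / (24 * 365):.1f} years nonstop)")
print(f"Classifier time: {classifier_hours:,.0f} hours "
      f"(~{classifier_hours / 24:.1f} days on one machine)")
print(f"Speed-up per image: {speedup:.0f}x")
```

That works out to roughly 74,700 hours (about 8.5 years of round-the-clock work) for a person versus about 156 hours (under a week) of serial classifier time, a 480-fold reduction per image. Spreading the job across many computers shortens the wall-clock time further.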

“AI can’t do the kind of skilled analysis a scientist can,” said JPL computer scientist Kiri Wagstaff. “But tools like this new algorithm can be their assistants. This paves the way for an exciting symbiosis of human and AI ‘investigators’ working together to accelerate scientific discovery.”

Finally, the team used NASA’s HiRISE camera, which can spot features as small as a kitchen table, to confirm that the dark smudge they had spotted was indeed a cluster of craters.

The classifier currently runs on dozens of high-performance computers at JPL. Now, the team wants to develop similar systems that can run on board Mars orbiters.

“The hope is that in the future, AI could prioritize orbital imagery that scientists are more likely to be interested in,” said Michael Munje, a Georgia Tech graduate student who worked on the classifier.
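The article doesn't explain how on-board prioritization would work. One simple way to picture it, assuming the orbiter could run a classifier that gives each image a hypothetical "interest" score and had a fixed downlink budget, is to send the highest-scoring images first until the budget is used up. The `Image` class, scores, sizes, and budget below are all invented for illustration.

```python
from dataclasses import dataclass

@dataclass
class Image:
    image_id: str
    interest_score: float  # hypothetical on-board classifier output, 0-1
    size_mb: float

def prioritize(images, downlink_budget_mb):
    """Pick images in descending score order, skipping any that would exceed the budget."""
    selected = []
    used_mb = 0.0
    for image in sorted(images, key=lambda img: img.interest_score, reverse=True):
        if used_mb + image.size_mb <= downlink_budget_mb:
            selected.append(image)
            used_mb += image.size_mb
    return selected

captured = [
    Image("A", 0.92, 40.0),
    Image("B", 0.15, 30.0),
    Image("C", 0.78, 50.0),
    Image("D", 0.60, 20.0),
]
to_downlink = prioritize(captured, downlink_budget_mb=100.0)
print([img.image_id for img in to_downlink])
```

Greedy selection by score is a simplification: it doesn't guarantee the best possible total score for a given budget (that is a knapsack problem), but it is cheap to compute.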

The researchers believe the tool could paint a fuller picture of meteor impacts on Mars, which could contain geological clues about life on the planet.

Dutch predictive policing tool ‘designed to ethnically profile,’ study finds

A predictive policing system used in the Netherlands discriminates against Eastern Europeans and treats people as “human guinea pigs under mass surveillance,” new research by Amnesty International has revealed.

The “Sensing Project” uses cameras and sensors to collect data on vehicles driving in and around Roermond, a small city in the southeastern Netherlands. An algorithm then purportedly calculates the probability that the driver and passengers intend to pickpocket or shoplift, and directs police towards the people and places it deems “high risk.”

The police present the project as a neutral system guided by objective crime data. But Amnesty found that it’s specifically designed to identify people of Eastern European origin — a form of automated ethnic profiling.

The project focuses on “mobile banditry,” a term used by Dutch law enforcement to describe property crimes, such as pickpocketing and shoplifting. Police claim that these crimes are predominantly committed by people from Eastern European countries — particularly those of Roma ethnicity, a historically marginalized group. Amnesty says law enforcement “explicitly excludes crimes committed by people with a Dutch nationality from the definition of ‘mobile banditry’.”

The watchdog discovered that these biases are deliberately embedded in the predictive policing system.

The investigation also found that the system creates many false positives, that police haven’t demonstrated its effectiveness, and that no one in Roermond had consented to the project.

“The Dutch authorities must call for a halt to the Sensing Project and similar experiments, which are in clear violation of the right to privacy, the right to data protection and the principles of legality and non-discrimination,” said Merel Koning, senior policy officer of technology and human rights at Amnesty.
