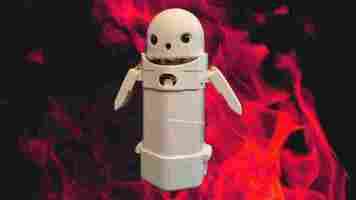

This tiny robot is supposed to stop you from revenge texting

It isn’t every day you stumble across the weirdest robot ever. But if you can guess this little machine’s function just by looking at it, you’ve officially won the internet for today.

No, it doesn’t vibrate. But you’re close. The researchers call it “OMOY: A Handheld Robotic Gadget that Shifts its Weight to Express Emotions and Intentions.”

Apparently, its purpose is to read your texts aloud and calm you down when you get upset. It does this by moving a 250g weight around inside its torso.

The researchers, a team from the University of Tsukuba, claim the physical sensation of feeling a weight shift inside the robot adds gravitas to what it says.

According to their recently published paper, the big idea is that the robot reads your text messages aloud and then offers an opinion on how you should feel about each message, all while moving a weight around inside its body.

That might not strike you as the be-all-end-all cure for social toxicity, but the researchers claim it reduces anger and revenge texting.

We could get into the details concerning how the team conducted the study wherein they arrived at these results, but the gist of it is that they asked people how they felt after holding a wiggly robot and pretending to hear angry texts.

According to the study participants, holding the robot while hearing negative texts made them feel less angry.

That actually makes a lot of sense. We’re guessing that, instead of anger, they just felt really weird about taking advice from an undulating, 3D-printed phallus.

An absolute beginner’s guide to deep learning with Python

Teaching yourself deep learning is a long and arduous process. You need a strong background in linear algebra and calculus, good Python programming skills, and a solid grasp of data science, machine learning, and data engineering. Even then, it can take more than a year of study and practice before you reach the point where you can start applying deep learning to real-world problems and possibly land a job as a deep learning engineer.

Knowing where to start, however, can help a lot in softening the learning curve. If I had to learn deep learning with Python all over again, I would start with Grokking Deep Learning, written by Andrew Trask. Most books on deep learning require a basic knowledge of machine learning concepts and algorithms. Trask’s book teaches you the fundamentals of deep learning without any prerequisites aside from basic math and programming skills.

The book won’t make you a deep learning wizard (and it doesn’t make such claims), but it will set you on a path that will make it much easier to learn from more advanced books and courses.

Building an artificial neuron in Python

Most deep learning books are based on one of several popular Python libraries such as TensorFlow, PyTorch, or Keras. In contrast, Grokking Deep Learning teaches you deep learning by building everything from scratch, line by line.

You start by developing a single artificial neuron, the most basic element of deep learning. Trask takes you through the basics of linear transformations, the main computation done by an artificial neuron. You then implement the artificial neuron in plain Python code, without using any special libraries.

This is not the most efficient way to do deep learning, because Python has many libraries that take advantage of your computer’s graphics card and the parallel processing power of your CPU to speed up computations. But writing everything in vanilla Python is excellent for learning the ins and outs of deep learning.

In Grokking Deep Learning, your first artificial neuron will take a single input, multiply it by a random weight, and make a prediction. You’ll then measure the prediction error and apply gradient descent to tune the neuron’s weight in the right direction. With a single neuron, single input, and single output, understanding and implementing the concept becomes very easy. You’ll gradually add more complexity to your models, using multiple input dimensions, predicting multiple outputs, applying batch learning, adjusting learning rates, and more.
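The single-neuron training loop described above can be sketched in a few lines of plain Python. This is a minimal illustration, not code from the book: the function name `train_single_neuron`, the squared-error measure, the learning rate, and the example numbers are all assumptions chosen for clarity.

```python
import random

def train_single_neuron(x, goal, lr=0.1, epochs=50, seed=0):
    """Hypothetical helper: tune one weight so that x * weight approaches goal."""
    random.seed(seed)
    weight = random.random()          # start from a random weight in [0, 1)
    error = None
    for _ in range(epochs):
        prediction = x * weight       # forward pass: the neuron's output
        delta = prediction - goal     # signed difference from the target
        error = delta ** 2            # squared error for this step
        weight_delta = delta * x      # gradient of (error / 2) with respect to weight
        weight -= lr * weight_delta   # gradient descent: step against the gradient
    return weight, error              # error is the last one measured before the final update

weight, error = train_single_neuron(x=2.0, goal=0.8)
```

With these settings the weight is driven toward 0.4, since 2.0 * 0.4 = 0.8. Each update shrinks the gap between the weight and 0.4 by a constant factor of 0.6 (that is, 1 - lr * x**2), which is why the loop converges so quickly.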

And you’ll implement every new concept by adding to and changing bits of the Python code you’ve written in previous chapters, gradually building a roster of functions for making predictions, calculating errors, applying corrections, and more. As you move from scalar to vector computations, you’ll shift from vanilla Python operations to NumPy, a library built for fast, vectorized array and matrix operations that is very popular in the machine learning and deep learning community.
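To illustrate the shift from scalar to vector computation, here is a hedged sketch comparing a hand-written weighted sum with NumPy’s `np.dot`, plus a matrix product that predicts several outputs at once. The input values, weights, and the `weighted_sum` helper are hypothetical and not taken from the book’s repository.

```python
import numpy as np

def weighted_sum(inputs, weights):
    """Plain-Python weighted sum: the linear transformation inside one neuron."""
    assert len(inputs) == len(weights), "inputs and weights must align"
    total = 0.0
    for x, w in zip(inputs, weights):
        total += x * w
    return total

inputs = [8.5, 0.65, 1.2]
weights = [0.1, 0.2, 0.0]

plain = weighted_sum(inputs, weights)                      # loop in vanilla Python
vectorized = np.dot(np.array(inputs), np.array(weights))   # same sum computed by NumPy

# Several output neurons at once: one row of weights per output
weight_matrix = np.array([[0.1, 0.1, -0.3],
                          [0.1, 0.2, 0.0],
                          [0.0, 1.3, 0.1]])
outputs = weight_matrix @ np.array(inputs)
```

Both `plain` and `vectorized` come out to roughly 0.98, and the second entry of `outputs` matches them because its weight row is identical to `weights`.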

Deep neural networks with Python

With the basic building blocks of artificial neurons under your belt, you’ll start creating deep neural networks, which is basically what you get when you stack several layers of artificial neurons on top of each other.

As you create deep neural networks, you’ll learn about activation functions and apply them to break the linearity of the stacked layers and create classification outputs. Again, you’ll implement everything yourself with the help of Numpy functions. You’ll also learn to compute gradients and propagate errors through layers to spread corrections across different neurons.
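A two-layer network trained with backpropagation might look roughly like the sketch below. It assumes a ReLU activation on the hidden layer, squared error on the output, one example per weight update, and a small made-up dataset whose target no purely linear model can fit exactly; the names (`train_two_layer`, `relu_deriv`, `w_0_1`, `w_1_2`) are hypothetical.

```python
import numpy as np

def relu(x):
    # ReLU activation: breaks the linearity between stacked layers
    return np.maximum(0, x)

def relu_deriv(output):
    # 1 where the ReLU was active, 0 where it clipped the input to zero
    return (output > 0).astype(float)

def train_two_layer(inputs, targets, hidden_size=4, lr=0.1, epochs=60, seed=1):
    """Train input -> hidden (ReLU) -> output with per-example gradient descent."""
    rng = np.random.default_rng(seed)
    # Random starting weights in [-1, 1)
    w_0_1 = 2 * rng.random((inputs.shape[1], hidden_size)) - 1
    w_1_2 = 2 * rng.random((hidden_size, targets.shape[1])) - 1
    losses = []
    for _ in range(epochs):
        epoch_loss = 0.0
        for i in range(len(inputs)):
            layer_0 = inputs[i:i + 1]              # shape (1, n_inputs)
            layer_1 = relu(layer_0 @ w_0_1)        # hidden activations
            layer_2 = layer_1 @ w_1_2              # linear output
            layer_2_delta = layer_2 - targets[i:i + 1]
            epoch_loss += float(np.sum(layer_2_delta ** 2))
            # Backpropagate: send the output delta back through w_1_2,
            # then zero it wherever the hidden ReLU was inactive
            layer_1_delta = (layer_2_delta @ w_1_2.T) * relu_deriv(layer_1)
            w_1_2 -= lr * layer_1.T @ layer_2_delta
            w_0_1 -= lr * layer_0.T @ layer_1_delta
        losses.append(epoch_loss)
    return w_0_1, w_1_2, losses

# Toy data: the target is 1 when exactly one of the first two inputs is on
# (the third column is always 1 and acts like a bias)
X = np.array([[1, 0, 1], [0, 1, 1], [0, 0, 1], [1, 1, 1]], dtype=float)
y = np.array([[1], [1], [0], [0]], dtype=float)
w_0_1, w_1_2, losses = train_two_layer(X, y)
```

The line computing `layer_1_delta` is where the error is propagated: the output correction is distributed across the hidden neurons in proportion to their outgoing weights, and neurons the ReLU switched off receive none of it.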

As you get more comfortable with the basics of deep learning, you’ll get to learn and implement more advanced concepts. The book covers popular regularization techniques such as early stopping and dropout. You’ll also get to craft your own versions of convolutional neural networks (CNNs) and recurrent neural networks (RNNs).
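Dropout, one of the regularization techniques mentioned above, can be sketched as a random mask applied to a layer’s activations during training. This version uses the common “inverted dropout” scaling, where surviving units are scaled up so no adjustment is needed at inference time; the function name and signature are assumptions for illustration, not the book’s code.

```python
import numpy as np

def dropout(activations, drop_prob, rng, training=True):
    """Hypothetical helper: inverted dropout over a layer's activations.

    Returns the (possibly) masked activations and the 0/1 mask that was used.
    """
    if not training or drop_prob == 0.0:
        # At inference time every unit is kept and nothing is rescaled
        return activations, np.ones_like(activations)
    keep_prob = 1.0 - drop_prob
    # Each unit survives independently with probability keep_prob
    mask = (rng.random(activations.shape) < keep_prob).astype(activations.dtype)
    # Scale survivors so the expected activation matches inference time
    return activations * mask / keep_prob, mask

rng = np.random.default_rng(0)
hidden = np.ones(1000)
dropped, mask = dropout(hidden, drop_prob=0.5, rng=rng)
```

During backpropagation, the same mask divided by the keep probability would be applied to that layer’s delta, so dropped units receive no correction for that example.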

By the end of the book, you’ll pack everything into a complete Python deep learning library, creating your own class hierarchy of layers, activation functions, and neural network architectures (you’ll need object-oriented programming skills for this part). If you’ve already worked with other Python libraries such as Keras and PyTorch, you’ll find the final architecture to be quite familiar. If you haven’t, you’ll have a much easier time getting comfortable with those libraries in the future.
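As a rough idea of what such a class hierarchy can look like, here is a forward-only sketch. The class names `Layer`, `Linear`, `ReLU`, and `Sequential` are hypothetical (they echo common naming in Keras and PyTorch but are not those libraries’ APIs), and the gradient bookkeeping a complete library needs is deliberately left out.

```python
import numpy as np

class Layer:
    """Hypothetical base class: every layer maps an input array to an output array."""
    def forward(self, x):
        raise NotImplementedError

    def parameters(self):
        return []  # layers without weights expose no parameters

class Linear(Layer):
    def __init__(self, n_inputs, n_outputs, rng):
        # He-style scaling of random weights (an assumption, not the book's choice)
        self.weight = rng.standard_normal((n_inputs, n_outputs)) * np.sqrt(2.0 / n_inputs)
        self.bias = np.zeros(n_outputs)

    def forward(self, x):
        return x @ self.weight + self.bias

    def parameters(self):
        return [self.weight, self.bias]

class ReLU(Layer):
    def forward(self, x):
        return np.maximum(0, x)

class Sequential(Layer):
    """Chains layers so the output of one becomes the input of the next."""
    def __init__(self, layers):
        self.layers = list(layers)

    def forward(self, x):
        for layer in self.layers:
            x = layer.forward(x)
        return x

    def parameters(self):
        return [p for layer in self.layers for p in layer.parameters()]

rng = np.random.default_rng(0)
model = Sequential([Linear(3, 4, rng), ReLU(), Linear(4, 1, rng)])
output = model.forward(np.ones((2, 3)))  # a batch of two 3-feature examples
```

Because `Sequential` is itself a `Layer`, models can be nested inside other models, which is one reason this composition pattern shows up in so many deep learning libraries.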

And throughout the book, Trask reminds you that practice makes perfect; he encourages you to code your own neural networks by heart without copy-pasting anything.

Code library is a bit cumbersome

Not everything about Grokking Deep Learning is perfect. In a previous post, I said that one of the main things that defines a good book is the code repository. And in this area, Trask could have done a much better job.

The GitHub repository of Grokking Deep Learning is rich with Jupyter Notebook files for every chapter. Jupyter Notebook is an excellent tool for learning Python machine learning and deep learning. However, the strength of Jupyter is in breaking down code into several small cells that you can execute and test independently. Some of Grokking Deep Learning’s notebooks are composed of very large cells with big chunks of uncommented code.

This becomes especially problematic in the later chapters, where the code becomes longer and more complex, and finding your way in the notebooks becomes very tedious. As a matter of principle, the code for educational material should be broken down into small cells and contain comments in key areas.

Also, Trask wrote the code in Python 2.7. While he has made sure that it also runs smoothly in Python 3, it contains older coding patterns that Python developers now generally avoid (such as using the “for i in range(len(array))” idiom to iterate over an array).
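For readers unfamiliar with the pattern being criticized, the sketch below contrasts index-based iteration with the more idiomatic `zip()` and `enumerate()` built-ins. The variable names and values are made up for illustration.

```python
inputs = [8.5, 0.65, 1.2]
weights = [0.1, 0.2, 0.0]

# Older index-based style, common in Python 2-era code
total_indexed = 0.0
for i in range(len(inputs)):
    total_indexed += inputs[i] * weights[i]

# Idiomatic Python 3: pair the values directly with zip()
total_zipped = sum(x * w for x, w in zip(inputs, weights))

# When the position is genuinely needed, enumerate() yields it with each value
labels = [f"input {i}: {x}" for i, x in enumerate(inputs)]
```

Both loops compute the same total; the `zip()` version avoids manual indexing, which removes a common source of off-by-one and length-mismatch bugs.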

The broader picture of artificial intelligence

Trask has done a great job of putting together a book that can serve both newbies and experienced Python deep learning developers who want to fill the gaps in their knowledge.

But as Tywin Lannister says (and every engineer will agree), “There’s a tool for every task, and a task for every tool.” Deep learning isn’t a magic wand that can solve every AI problem. In fact, for many problems, simpler machine learning algorithms such as linear regression and decision trees will perform as well as deep learning, while for others, rule-based techniques such as regular expressions and a couple of if-else clauses will outperform both.

The point is, you’ll need a full arsenal of tools and techniques to solve AI problems. Hopefully, Grokking Deep Learning will help get you started on the path to acquiring those tools.

Where do you go from here? I would certainly suggest picking up an in-depth book on Python deep learning such as Deep Learning With PyTorch or Deep Learning With Python. You should also deepen your knowledge of other machine learning algorithms and techniques. Two of my favorite books are Hands-on Machine Learning and Python Machine Learning.

You can also pick up a lot of knowledge by browsing machine learning and deep learning forums such as the r/MachineLearning and r/deeplearning subreddits, the AI and deep learning Facebook group, or by following AI researchers on Twitter.

The AI universe is vast and quickly expanding, and there is a lot to learn. If this is your first book on deep learning, then this is the beginning of an amazing journey.

This article was originally published by Ben Dickson on TechTalks, a publication that examines trends in technology, how they affect the way we live and do business, and the problems they solve. But we also discuss the evil side of technology, the darker implications of new tech, and what we need to look out for.

AI tool predicts Arctic sea ice loss caused by climate change

Scientists have built an AI tool that forecasts Arctic sea ice conditions, which could help protect local wildlife and people from changes caused by global warming.

The deep learning system, named IceNet, was developed by a research team led by the British Antarctic Survey (BAS) and The Alan Turing Institute.

The model was trained on climate simulations and observational data to forecast the next six months of sea ice concentration maps.

In tests, the system was almost 95% accurate in predicting whether sea ice will be present two months ahead.
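One plausible way to compute an accuracy figure like this is to threshold sea ice concentration maps into ice and no-ice cells and count how often forecast and observation agree. The sketch below assumes a 15% concentration threshold (a widely used convention for defining sea ice extent) and an optional mask for the cells to score; it is not IceNet’s evaluation code, and the function name is hypothetical.

```python
import numpy as np

def binary_ice_accuracy(predicted_sic, observed_sic, threshold=0.15, mask=None):
    """Hypothetical metric: fraction of grid cells where ice presence agrees.

    predicted_sic and observed_sic are concentration maps with values in [0, 1].
    mask, if given, is a boolean array selecting which cells to score.
    """
    pred_ice = predicted_sic > threshold   # forecast: ice present?
    obs_ice = observed_sic > threshold     # observation: ice present?
    agree = pred_ice == obs_ice
    if mask is not None:
        agree = agree[mask]                # keep only the cells of interest
    return float(agree.mean())

predicted = np.array([[0.9, 0.1], [0.5, 0.0]])
observed = np.array([[0.8, 0.2], [0.05, 0.0]])
ocean = np.array([[True, True], [False, True]])
```

On this toy 2x2 grid, two of the four cells agree, so `binary_ice_accuracy(predicted, observed)` gives 0.5; restricting the score to the three `ocean` cells raises it to two out of three.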

Study lead author Tom Andersson said IceNet outperforms the leading physics-based models and runs thousands of times faster.

AI takes on global warming

The Arctic has warmed at around two to three times the rate of the global average. As a result, summer sea ice is now half the size it was just 40 years ago.

The decline is having a huge impact on local people and wildlife. IceNet could help them adapt to future changes by providing early warnings about the timing and locations of sea ice loss.

The researchers are now investigating whether adding ice thickness to IceNet’s inputs improves its accuracy. They will also implement an online version of the tool that operates on a daily temporal resolution, which could enhance performance at short lead times.

The system will not prevent global warming, but it could at least mitigate some of the damage.